Kubernetes provides a powerful platform for managing containerized applications and allocating resources dynamically at scale. However, it also creates new challenges for managing cloud costs effectively.

With Kubernetes, organizations can quickly spin up new resources and scale them up and down based on demand. This agility is valuable, but it can lead to cost overruns if left unmanaged. FinOps provides a framework for keeping these costs under control.

Cloud FinOps Definition:

FinOps is an evolving cloud financial management discipline and cultural practice that enables organizations to get maximum business value by helping engineering, finance, technology, and business teams to collaborate on data-driven spending decisions.

Principles of FinOps

One of the key principles of FinOps in a Kubernetes world is optimizing resource usage. Kubernetes provides resource quotas, limits, and autoscaling, which can be used to prevent over-provisioning and to avoid paying for resources that are not needed.

Another principle of FinOps in a Kubernetes world is monitoring cloud costs closely. Kubernetes exposes resource metrics that monitoring tools can use to show how resources are utilized and to identify where usage can be optimized and cloud spending reduced.

Finally, FinOps emphasizes the importance of collaboration between the business, finance, operations, and development teams. By working together, these teams can establish a holistic view of cloud operations and identify areas for optimization. They can also ensure that cloud investments align with business objectives.

Phases of FinOps

The FinOps Foundation recommends a three-phase approach to managing cloud spend. This approach is iterative; organizations should regularly return to the inform and optimize phases for continuous improvement.

Inform: Gathering all the necessary information about cloud and Kubernetes usage and cost, making it visible and accessible to stakeholders.

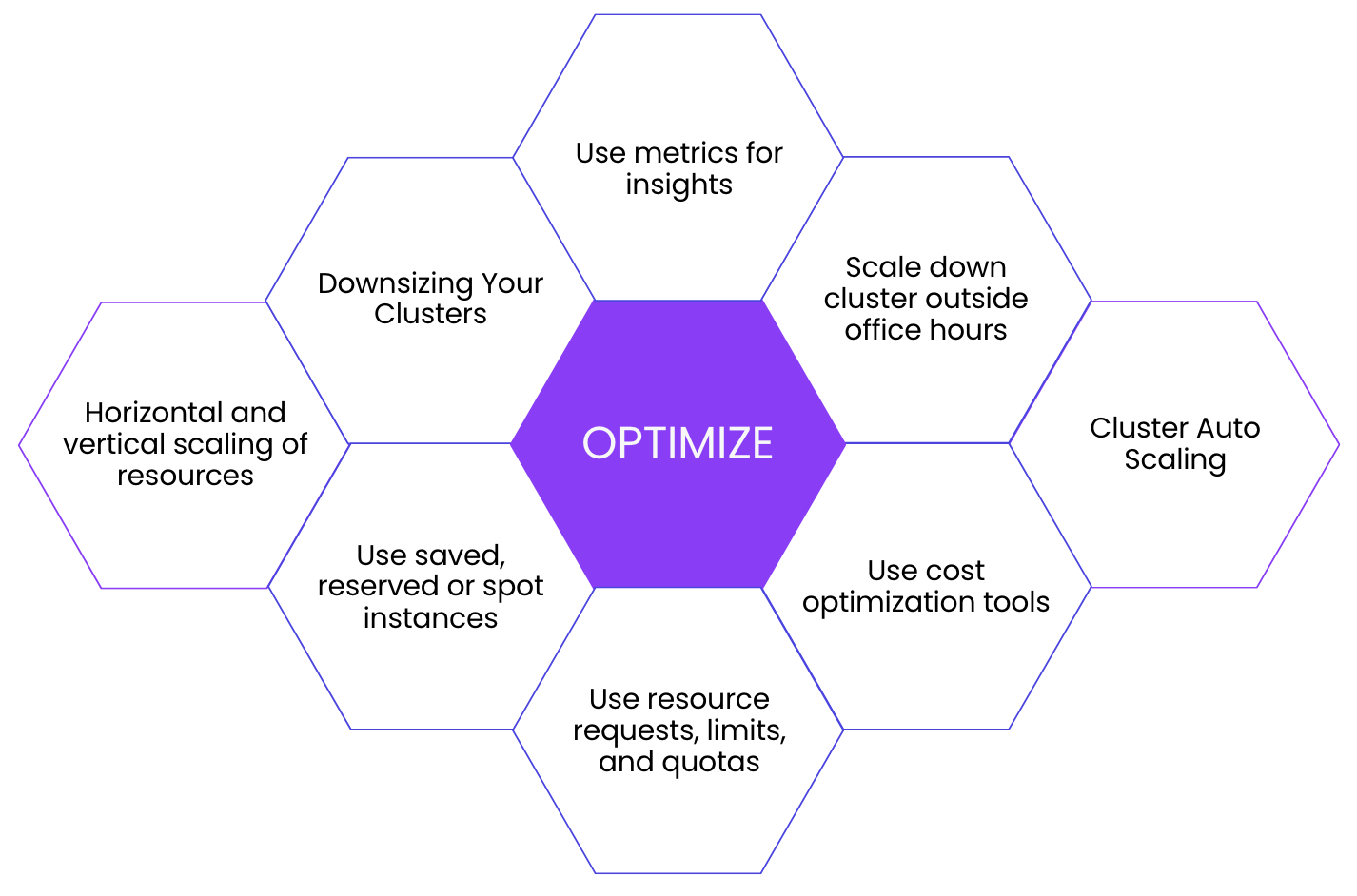

Optimize: Identifying key cost-optimization drivers and providing a standardized process for optimizing cloud and Kubernetes resource consumption.

Operate: Planning and improving cloud and Kubernetes consumption. Stakeholders work together to review cloud and Kubernetes spending and make business trade-offs between speed, cost, and quality.
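To make the Inform phase more concrete, here is a minimal sketch of one common way to make Kubernetes costs visible: splitting a node's cost across namespaces in proportion to the CPU their pods request. The function name, the namespace names, and all figures are hypothetical, and real cost-allocation tools usually weigh memory and other resources as well.

```python
from collections import defaultdict


def allocate_cost_by_namespace(node_cost, pod_requests):
    """Split a node's cost across namespaces by share of requested CPU.

    node_cost: cost of the node for the billing period (any currency).
    pod_requests: list of (namespace, cpu_request_in_millicores) tuples.
    """
    total = sum(cpu for _, cpu in pod_requests)
    if total == 0:
        return {}
    shares = defaultdict(float)
    for namespace, cpu in pod_requests:
        shares[namespace] += node_cost * cpu / total
    return dict(shares)


# Hypothetical data: one node costing 100 units, three pods in two namespaces.
costs = allocate_cost_by_namespace(
    100.0, [("checkout", 500), ("checkout", 500), ("search", 1000)]
)
print(costs)
```

Note that this sketch divides by the total requested CPU, so the cost of idle capacity on the node is spread across the namespaces too. Dividing by the node's capacity instead would report idle cost separately.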

Ways to Optimize your Kubernetes Costs

Efficient cloud resource usage is critical for organizations leveraging Kubernetes to deploy and manage their containerized applications. Here are some Kubernetes best practices that can help organizations optimize their cloud resource usage.

Downsizing Your Clusters

Kubernetes provides rich monitoring capabilities that can be used to gain insights into resource utilization, application performance, and capacity management.

You can reduce costs by decreasing the number and size of your clusters. For example, you might delete a whole cluster or remove nodes from a cluster. Visualizing the utilization of Kubernetes resources helps identify unallocated capacity that can be scaled down. Cutting underutilized resources is often the easiest way to cut costs.
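As a rough illustration of spotting candidates for downsizing, the sketch below flags nodes whose requested CPU is a small fraction of what they can allocate. The node names, the numbers, and the 30% threshold are assumptions chosen for the example, not recommendations.

```python
def underutilized_nodes(nodes, threshold=0.3):
    """Return names of nodes whose requested CPU is below `threshold`
    (a fraction between 0 and 1) of their allocatable CPU."""
    result = []
    for node in nodes:
        utilization = node["requested_cpu"] / node["allocatable_cpu"]
        if utilization < threshold:
            result.append(node["name"])
    return result


# Hypothetical inventory, CPU values in millicores.
nodes = [
    {"name": "node-a", "allocatable_cpu": 4000, "requested_cpu": 3200},
    {"name": "node-b", "allocatable_cpu": 4000, "requested_cpu": 400},
    {"name": "node-c", "allocatable_cpu": 8000, "requested_cpu": 2000},
]
print(underutilized_nodes(nodes))
```

In this made-up inventory, node-b and node-c fall below the threshold, so their workloads might be consolidated onto fewer or smaller nodes.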

Another way is to use a shared, multi-tenant cluster instead of a cluster per tenant. For details, see our blog post about multi-tenancy.

You can also use your cloud provider’s cost optimization tools. Cloud providers offer a variety of tools and features for analyzing spend, and organizations can use them to gain insights into their cloud costs and identify areas for optimization.

By monitoring resource usage closely, organizations can identify areas to optimize their resource usage and reduce their cloud spend.

Auto Scaling

Kubernetes provides features for horizontal and vertical scaling of resources, enabling organizations to adjust resource allocation based on application demand. For example, the Cluster Autoscaler adds nodes when pods cannot be scheduled for lack of capacity and removes nodes that stay underutilized. In addition, you set the minimum and maximum limits for your resource configurations, ensuring the system doesn’t accidentally scale up or down too much.
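For horizontal scaling, the Kubernetes documentation describes the HorizontalPodAutoscaler's core calculation as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below reproduces that calculation and clamps the result to a minimum and maximum. It deliberately leaves out the tolerance band and stabilization behavior of the real controller, and the function name and figures are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Scale replicas by the ratio of observed to target metric,
    then clamp the result to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU against a 60% target: scale out to 6.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))
# Same load, but the maximum caps the result at 5.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=5))
# Low load would suggest 1 replica, but the minimum keeps 2.
print(desired_replicas(4, 15, 60, min_replicas=2, max_replicas=10))
```

The bounds are what keep costs predictable: the maximum caps spend during a traffic spike, while the minimum protects availability when load drops.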

Optionally, you can scale down workloads when they are not in use, for example a test environment that sits idle at night and on weekends.
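A schedule like this can be expressed as a small decision function. The following is only a sketch: the working hours, the replica count, and the function name are assumptions, and in practice a scheduled job would take the returned value and apply it to the workload.

```python
from datetime import datetime


def replicas_for(now, working_replicas=3, start_hour=8, end_hour=20):
    """Return the replica count for a test environment at time `now`:
    full size on weekdays during working hours, zero otherwise."""
    is_weekend = now.weekday() >= 5  # Monday is 0, so 5 and 6 are the weekend
    in_hours = start_hour <= now.hour < end_hour
    return working_replicas if (not is_weekend and in_hours) else 0


print(replicas_for(datetime(2024, 1, 10, 10)))  # Wednesday morning
print(replicas_for(datetime(2024, 1, 10, 23)))  # Wednesday night
print(replicas_for(datetime(2024, 1, 13, 10)))  # Saturday morning
```

With these assumed hours, the environment runs 60 of the 168 hours in a week, so roughly two thirds of its compute time is no longer paid for.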

By leveraging these scaling features, organizations can ensure that resources are allocated efficiently based on application demand.

Right Size your Workloads

Right-sizing Kubernetes workloads is important for optimizing cloud costs and using resources efficiently. One strategy for right-sizing workloads in Kubernetes is to use resource requests, limits, and quotas.

Requests indicate the amount of resources that a container needs to run smoothly; the scheduler uses them to decide which node a pod fits on. In contrast, limits indicate the maximum amount of resources a container can use. By setting appropriate resource requests and limits for the containers in each pod, organizations can ensure that their workloads are right-sized and use their resources effectively.

Quotas limit the total amount of resources that the workloads in a Kubernetes namespace can consume. By setting quotas, organizations can prevent the workloads in one namespace from consuming too many resources.
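The effect of a quota can be pictured as a simple admission check: a new workload is accepted only if the namespace's total requests stay within every limit. The sketch below models that idea in plain Python. The dictionary keys and numbers are hypothetical and do not mirror the actual ResourceQuota object format.

```python
def fits_quota(quota, used, new_request):
    """Return True if adding `new_request` to `used` keeps every
    resource listed in `quota` at or below its limit."""
    return all(
        used.get(resource, 0) + new_request.get(resource, 0) <= limit
        for resource, limit in quota.items()
    )


# Hypothetical namespace quota and current usage.
quota = {"cpu_millicores": 4000, "memory_mib": 8192}
used = {"cpu_millicores": 3500, "memory_mib": 4096}

print(fits_quota(quota, used, {"cpu_millicores": 250, "memory_mib": 512}))
print(fits_quota(quota, used, {"cpu_millicores": 1000, "memory_mib": 512}))
```

The first request fits, while the second would push CPU requests past the quota and would be rejected.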

To determine the appropriate resource requests and limits for a Kubernetes workload, organizations can use metrics like CPU usage, memory usage, and network usage.
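One possible heuristic for turning usage metrics into a request value is to take a high percentile of observed usage and add some headroom. The sketch below does this with a nearest-rank percentile. The 95th percentile, the 15% headroom, and the sample values are assumptions for illustration rather than recommended settings.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_request(samples, pct=95, headroom_percent=15):
    """Suggest a request: the chosen usage percentile plus headroom,
    rounded up to a whole unit."""
    base = percentile(samples, pct)
    return math.ceil(base * (100 + headroom_percent) / 100)


# Hypothetical CPU usage samples in millicores, including one spike.
cpu_samples = [120, 150, 130, 400, 140, 160, 135, 145, 155, 150]

print(recommend_request(cpu_samples))          # sized for the spike
print(recommend_request(cpu_samples, pct=50))  # sized for typical usage
```

Which percentile to choose is a business trade-off: sizing for the spike costs more but avoids throttling, while sizing for typical usage is cheaper but relies on limits or autoscaling to absorb peaks.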

Purchase Cloud Resources at a Discounted Rate

When it comes to optimizing cloud costs, one strategy that organizations can use is to leverage saved or reserved instances. Both options allow organizations to purchase cloud resources at a discounted rate compared to on-demand instances:

Saved instances refer to unused instances that an organization has already paid for but is not running. However, it’s important to note that saved instances are not always available and may only be suitable for some use cases.

Reserved instances, by contrast, involve committing to cloud resources in advance in exchange for a discounted rate. Organizations purchase reserved instances for a specified term, typically one or three years. As a result, reserved instances are ideal for workloads with predictable demand and usage patterns.
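Whether a reservation pays off depends on how many hours the instance actually runs. The sketch below computes a break-even utilization under a simplified model in which a reservation is billed for every hour of the term and on-demand is billed only for hours used. The prices are invented for the example and are not real provider rates.

```python
def break_even_utilization(on_demand_hourly, reserved_hourly):
    """Fraction of hours an instance must run before a reservation
    becomes cheaper than paying on-demand for the hours used."""
    return reserved_hourly / on_demand_hourly


def cheaper_option(on_demand_hourly, reserved_hourly, expected_utilization):
    """Compare the average hourly cost of both options for a workload
    that runs `expected_utilization` (0 to 1) of the time."""
    on_demand_cost = on_demand_hourly * expected_utilization
    return "reserved" if reserved_hourly < on_demand_cost else "on-demand"


# Hypothetical prices: 0.10 per hour on-demand, 0.06 per hour reserved.
print(round(break_even_utilization(0.10, 0.06), 2))
print(cheaper_option(0.10, 0.06, expected_utilization=0.9))
print(cheaper_option(0.10, 0.06, expected_utilization=0.4))
```

With these made-up prices, the reservation only wins if the instance runs more than about 60% of the time, which is why predictable, steady workloads are the natural fit.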

Another option is to use spot instances or preemptible instances:

Spot instances are spare compute capacity that providers sell at a discounted rate compared to on-demand instances. The trade-off is that the provider can reclaim a spot instance at short notice when it needs the capacity back.

Spot instances are a good fit for workloads that have flexible start and end times and can tolerate interruptions. Typical use cases include batch processing, data analytics, and testing environments that can run at a lower priority and do not require constant availability.

However, spot instances are not suitable for all workloads. For example, they may not be appropriate for workloads that require constant availability, such as mission-critical production workloads or real-time applications.

While saved, reserved, and spot instances can be cost-effective options for organizations, it’s important to evaluate carefully whether each one suits a given use case.

Conclusion

Kubernetes and FinOps are complementary concepts that can help organizations manage their cloud resources efficiently. Kubernetes provides the capabilities for managing containerized applications at scale, while FinOps delivers a framework for managing cloud costs effectively.

By leveraging the capabilities of Kubernetes and implementing FinOps practices, organizations can optimize their cloud usage and reduce their cloud costs while maintaining application performance.